Here’s how AI-powered autocompletion is implemented in Novel, an open-source text editor

In this article, we analyse how Novel implements AI-powered autocompletion in its beautiful editor.

Novel is an open-source, Notion-style WYSIWYG editor with AI-powered autocompletion. It is built on top of Tiptap, a headless, open-source editor framework that offers more than 100 extensions, along with paid features such as collaboration and AI agents.

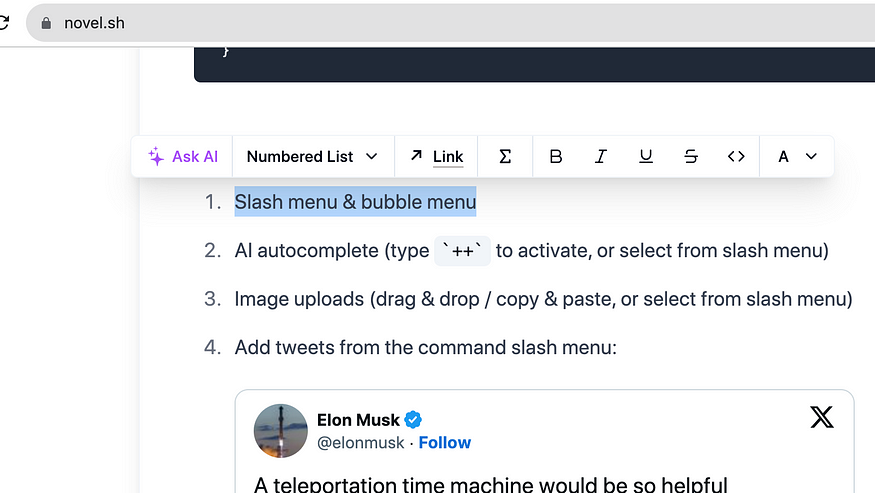

Since we are interested in the AI-powered autocompletion, we first need to know where “Ask AI” appears. Open Novel and select any text with your mouse in the editor that is rendered by default. A widget containing the “Ask AI” button pops up.

Now let's find the code responsible for this widget. The Novel repository is a monorepo, and it has a workspace folder named web inside apps.

Since this is a Next.js project that uses the App Router, the entry point is page.tsx, which renders a component named TailwindAdvancedEditor:

```tsx
export default function Page() {
  return (
    <div className="flex min-h-screen flex-col items-center gap-4 py-4 sm:px-5">
      <div className="flex w-full max-w-screen-lg items-center gap-2 px-4 sm:mb-[calc(20vh)]">
        <Button size="icon" variant="outline">
          <a href="https://github.com/steven-tey/novel" target="_blank" rel="noreferrer">
            <GithubIcon />
          </a>
        </Button>
        <Dialog>
          <DialogTrigger asChild>
            <Button className="ml gap-2">
              <BookOpen className="h-4 w-4" />
              Usage in dialog
            </Button>
          </DialogTrigger>
          <DialogContent className="flex max-w-3xl h-[calc(100vh-24px)]">
            <ScrollArea className="max-h-screen">
              <TailwindAdvancedEditor />
            </ScrollArea>
          </DialogContent>
        </Dialog>
        <Link href="/docs" className="ml-auto">
          <Button variant="ghost">Documentation</Button>
        </Link>
        <Menu />
      </div>

      {/* Here is the Editor component */}
      <TailwindAdvancedEditor />
    </div>
  );
}
```

The TailwindAdvancedEditor component is imported from components/tailwind/advanced-editor.tsx. At line 123 of that file, you will find a component named GenerativeMenuSwitch. This is the widget you see when you select some text in the Novel editor.

```tsx
<GenerativeMenuSwitch open={openAI} onOpenChange={setOpenAI}>
  <Separator orientation="vertical" />
  <NodeSelector open={openNode} onOpenChange={setOpenNode} />
  <Separator orientation="vertical" />
  <LinkSelector open={openLink} onOpenChange={setOpenLink} />
  <Separator orientation="vertical" />
  <MathSelector />
  <Separator orientation="vertical" />
  <TextButtons />
  <Separator orientation="vertical" />
  <ColorSelector open={openColor} onOpenChange={setOpenColor} />
</GenerativeMenuSwitch>
```

Generative Menu Switch

At line 41 in generative-menu-switch.tsx, you will find the code for the “Ask AI” button:

```tsx
{!open && (
  <Fragment>
    <Button
      className="gap-1 rounded-none text-purple-500"
      variant="ghost"
      onClick={() => onOpenChange(true)}
      size="sm"
    >
      <Magic className="h-5 w-5" />
      Ask AI
    </Button>
    {children}
  </Fragment>
)}
```

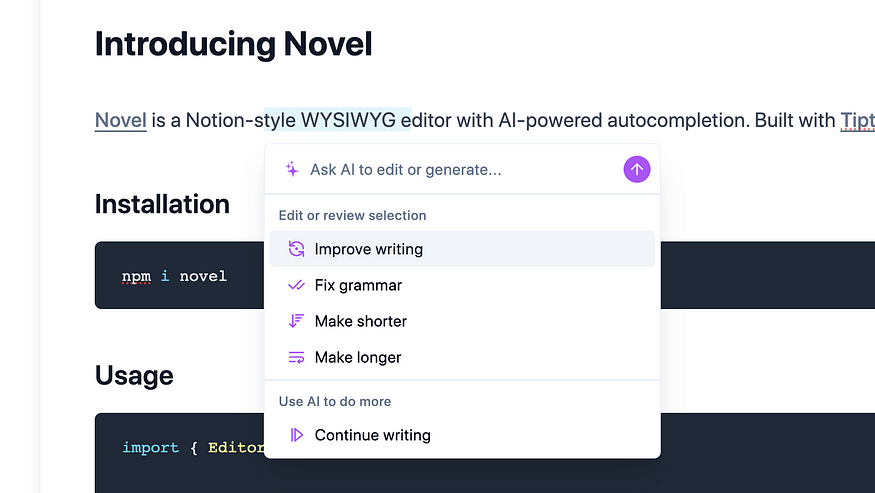

When you click on this “Ask AI” button, a new widget is shown.

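Which widget is rendered depends on the open prop, as the condition below shows: AISelector when open is true, and the “Ask AI” button with the rest of the menu otherwise.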

```tsx
{open && <AISelector open={open} onOpenChange={onOpenChange} />}
{!open && (
  <Fragment>
    <Button
      className="gap-1 rounded-none text-purple-500"
      variant="ghost"
      onClick={() => onOpenChange(true)}
      size="sm"
    >
      <Magic className="h-5 w-5" />
      Ask AI
    </Button>
    {children}
  </Fragment>
)}
```

We need to analyse ai-selector.tsx at this point.

AI Selector

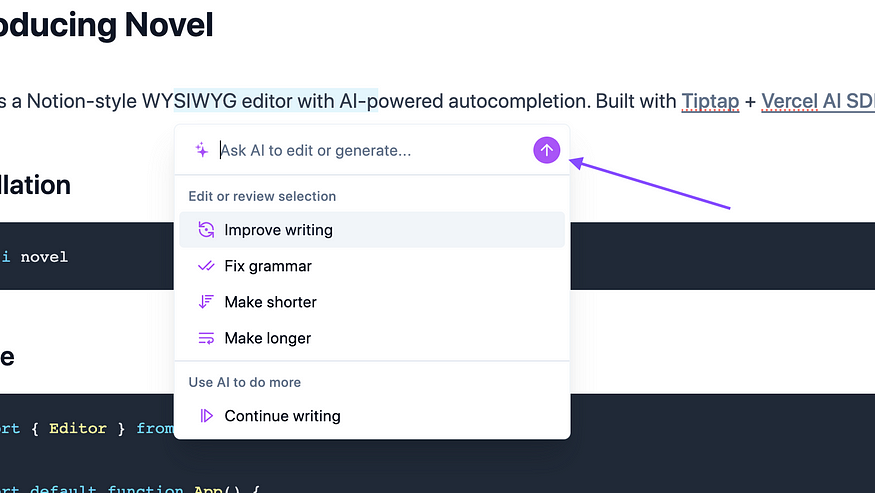

ai-selector.tsx contains the code that handles the autocompletion once you enter a prompt and submit it. The ArrowUp button that submits the prompt has an onClick handler that calls a function named complete:

```tsx
onClick={() => {
  // If a completion already exists, refine it with the new command.
  if (completion)
    return complete(completion, {
      body: { option: "zap", command: inputValue },
    }).then(() => setInputValue(""));

  // Otherwise, serialize the current selection to Markdown and use it as the prompt.
  const slice = editor.state.selection.content();
  const text = editor.storage.markdown.serializer.serialize(slice.content);

  complete(text, {
    body: { option: "zap", command: inputValue },
  }).then(() => setInputValue(""));
}}
```
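The branching in this handler can be isolated as a small pure function. The sketch below is only an illustration: buildCompletionRequest and the CompletionRequest type are hypothetical names that do not exist in Novel, and the sample strings are made up.

```typescript
// Hypothetical helper that mirrors the branching in the onClick handler.
interface CompletionRequest {
  prompt: string;
  body: { option: "zap"; command: string };
}

function buildCompletionRequest(
  previousCompletion: string,
  selectedMarkdown: string,
  command: string
): CompletionRequest {
  // A non-empty previous completion takes priority, so a follow-up
  // command refines the AI output instead of the original selection.
  const prompt = previousCompletion ? previousCompletion : selectedMarkdown;
  return { prompt, body: { option: "zap", command } };
}

// First request: no completion yet, so the selected text is the prompt.
const first = buildCompletionRequest("", "Novel is an editor.", "make it formal");

// Follow-up request: the earlier completion becomes the prompt.
const followUp = buildCompletionRequest(
  "Novel is a refined editor.",
  "Novel is an editor.",
  "make it shorter"
);

console.log(first.prompt);
console.log(followUp.prompt);
```

In both cases the user's command travels in the request body, which is how the server can tell the text to transform apart from the instruction to apply.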

complete is returned by the useCompletion hook, as shown below:

```tsx
const { completion, complete, isLoading } = useCompletion({
  // id: "novel",
  api: "/api/generate",
  onResponse: (response) => {
    if (response.status === 429) {
      toast.error("You have reached your request limit for the day.");
      return;
    }
  },
  onError: (e) => {
    toast.error(e.message);
  },
});
```

useCompletion is a hook provided by ai/react that lets you build text-completion features in your application. It streams text completions from your AI provider, manages the state for the input, and updates the UI automatically as new chunks of the completion are received.
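To make that description concrete, here is a minimal sketch of the kind of state such a hook has to manage: a completion string that grows chunk by chunk, a loading flag, and a way to notify the UI. CompletionStore and its methods are hypothetical names for illustration and are not part of ai/react.

```typescript
// Hypothetical stand-in for the state a completion hook keeps internally.
type Listener = (completion: string, isLoading: boolean) => void;

class CompletionStore {
  private completion = "";
  private isLoading = false;
  private listeners: Listener[] = [];

  // Register a callback that plays the role of a UI re-render.
  subscribe(listener: Listener): void {
    this.listeners.push(listener);
  }

  // Called when a request starts: clear old text and flag loading.
  start(): void {
    this.completion = "";
    this.isLoading = true;
    this.notify();
  }

  // Called for every streamed chunk: append it and notify listeners.
  append(chunk: string): void {
    this.completion += chunk;
    this.notify();
  }

  // Called when the stream closes.
  finish(): void {
    this.isLoading = false;
    this.notify();
  }

  private notify(): void {
    for (const listener of this.listeners) {
      listener(this.completion, this.isLoading);
    }
  }
}

// Simulate a response that arrives in three chunks.
const renders: string[] = [];
const store = new CompletionStore();
store.subscribe((text, loading) => {
  renders.push((loading ? "loading" : "done") + ":" + text);
});
store.start();
for (const chunk of ["Hel", "lo ", "world"]) {
  store.append(chunk);
}
store.finish();
console.log(renders);
```

Each append triggers a notification, which is why the text appears to be typed out progressively instead of showing up all at once.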

This rings a bell for me: CopilotKit requires a similar API endpoint configuration to integrate AI capabilities into your application. I read about configuring such an endpoint in the CopilotKit quick start.

/api/generate Route

A lot happens in api/generate/route.ts. Here is an overview of what this route does.

- There is a POST method, and it configures the OpenAI client:

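```ts
export async function POST(req: Request): Promise<Response> {
  const openai = new OpenAI({
    apiKey: process.env.OPENAI_API_KEY,
    // Falls back to the official endpoint when no custom base URL is set.
    baseURL: process.env.OPENAI_BASE_URL || "https://api.openai.com/v1",
  });
  // ... rate limiting, prompt configuration, and streaming follow (see below)
}
```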

- Rate limiting is applied per IP address using the [Upstash rate limiting strategy](https://github.com/upstash/ratelimit-js), here a sliding window of 50 requests per day:

```ts
if (process.env.KV_REST_API_URL && process.env.KV_REST_API_TOKEN) {
  const ip = req.headers.get("x-forwarded-for");
  const ratelimit = new Ratelimit({
    redis: kv,
    limiter: Ratelimit.slidingWindow(50, "1 d"),
  });

  const { success, limit, reset, remaining } = await ratelimit.limit(`novel_ratelimit_${ip}`);

  if (!success) {
    return new Response("You have reached your request limit for the day.", {
      status: 429,
      headers: {
        "X-RateLimit-Limit": limit.toString(),
        "X-RateLimit-Remaining": remaining.toString(),
        "X-RateLimit-Reset": reset.toString(),
      },
    });
  }
}
```
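To get a feel for what a sliding-window limit does, here is a simplified in-memory sketch. It keeps a log of timestamps per key, which is an assumption made for readability and not how Upstash implements its limiter; SlidingWindowLimiter, LimitResult, and the sample IP address are hypothetical.

```typescript
// Simplified in-memory sliding-window limiter (a log of timestamps per key).
// This is a stand-in for illustration, not Upstash's actual algorithm.
interface LimitResult {
  success: boolean;
  limit: number;
  remaining: number;
  reset: number; // time (ms) at which the oldest counted request leaves the window
}

class SlidingWindowLimiter {
  private hits: Record<string, number[]> = {};

  constructor(private limit: number, private windowMs: number) {}

  check(key: string, now: number): LimitResult {
    const windowStart = now - this.windowMs;
    // Drop timestamps that have slid out of the window.
    const recent = (this.hits[key] || []).filter((t) => t > windowStart);
    const success = recent.length < this.limit;
    // Only accepted requests count against the limit.
    if (success) recent.push(now);
    this.hits[key] = recent;
    const reset = recent.length > 0 ? recent[0] + this.windowMs : now;
    return { success, limit: this.limit, remaining: this.limit - recent.length, reset };
  }
}

// 2 requests per 1000 ms, keyed with the same prefix as the route's key.
const limiter = new SlidingWindowLimiter(2, 1000);
const clientKey = "novel_ratelimit_203.0.113.7"; // hypothetical IP address

const r1 = limiter.check(clientKey, 0);    // accepted
const r2 = limiter.check(clientKey, 100);  // accepted, window is now full
const r3 = limiter.check(clientKey, 200);  // rejected
const r4 = limiter.check(clientKey, 1001); // accepted, the hit at t=0 has expired

console.log(r1.success, r2.success, r3.success, r4.success);
```

The returned fields correspond to the values the route exposes through the X-RateLimit-* headers, so a client can tell how many requests remain and when to retry.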

Lines 45–123 configure the prompt messages.

These messages are sent to the OpenAI API at line 125.

Finally, the response is converted into a text stream, and the POST method returns a new StreamingTextResponse at the end of the file.
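The prompt configuration can be pictured as a function that maps the option sent by the client (for example "zap") to a list of chat messages. The sketch below is an assumption about the general shape only: buildMessages is a hypothetical name, the "improve" option is invented for contrast, and the prompt wording is illustrative and not copied from Novel.

```typescript
// Chat message shape used by chat-completion style APIs.
interface ChatMessage {
  role: "system" | "user";
  content: string;
}

// Hypothetical prompt table: the wording below is illustrative only.
function buildMessages(option: string, prompt: string, command: string): ChatMessage[] {
  switch (option) {
    case "zap":
      // "zap" pairs the selected text with the user's free-form command.
      return [
        {
          role: "system",
          content: "You are a writing assistant. Apply the user's command to the given text.",
        },
        { role: "user", content: "Text: " + prompt + "\nCommand: " + command },
      ];
    case "improve": // hypothetical second option, not taken from the source
      return [
        { role: "system", content: "You are a writing assistant. Improve the given text." },
        { role: "user", content: "Text: " + prompt },
      ];
    default:
      // Fail loudly instead of sending an empty prompt to the model.
      throw new Error("Unknown option: " + option);
  }
}

const chatMessages = buildMessages("zap", "Novel is an editor.", "make it formal");
console.log(chatMessages);
```

Keeping the mapping in one function makes it easy to add an option on the server without touching the client, which only needs to send the option name, the prompt, and the command.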


Conclusion

This is how you can configure AI-powered completion in an editor, and it is definitely worth experimenting with.

The important concepts to remember are configuring an API endpoint, enforcing a rate limit based on IP address to prevent spam, and handling the streamed response.